1. Why the AI-Ready Platform Matters

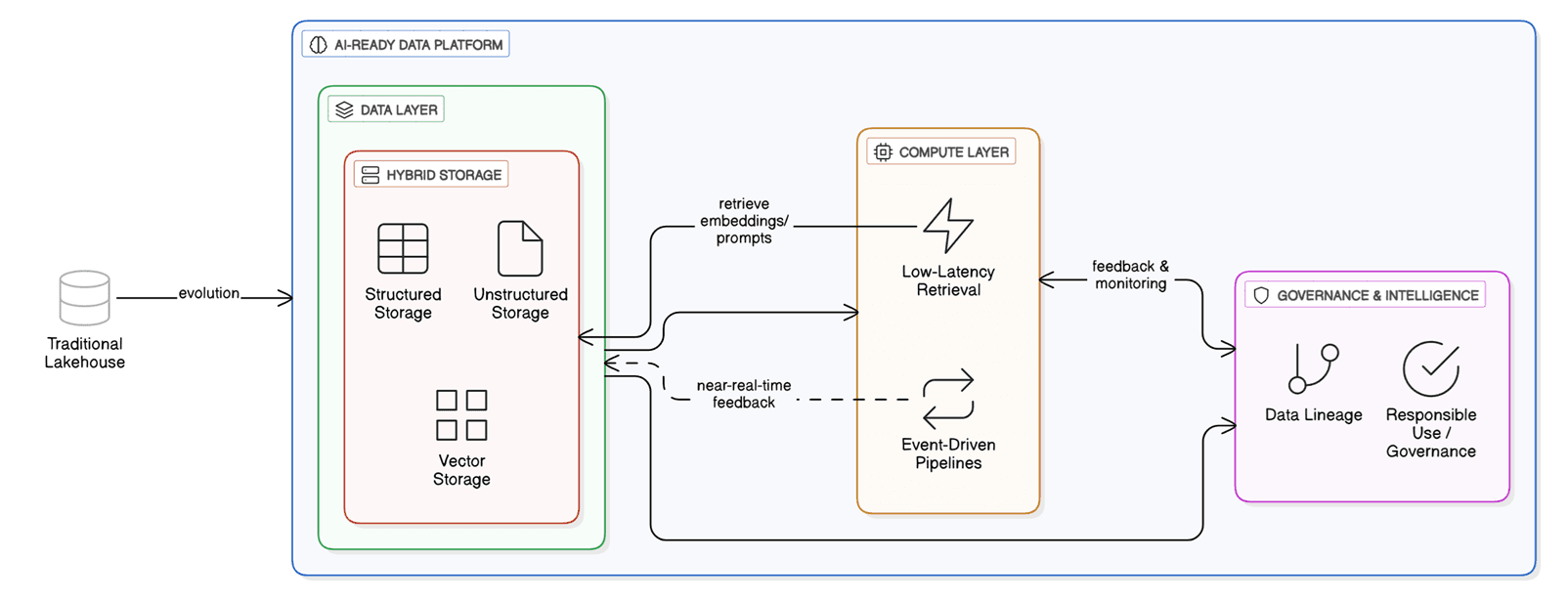

Every enterprise wants to “do AI,” but few have a platform truly ready for it. An AI-ready data platform isn’t just about adding vector search or calling an LLM API — it’s about building a foundation where data, compute, and intelligence evolve together.

Traditional lakehouses handle data; modern AI workloads demand:

- Low-latency retrieval for embeddings and prompts

- Data lineage and governance for responsible use

- Scalable hybrid storage (structured + unstructured + vector)

- Event-driven pipelines for near-real-time model feedback

2. Reference Architecture Overview

```
┌───────────────────────────────┐
│ Experience & Delivery Layer   │
│ - AI apps / chatbots          │
│ - Dashboards / APIs           │
└───────────────┬───────────────┘
                │
┌───────────────▼───────────────┐
│ Intelligence Layer            │
│ - Feature Store               │
│ - Vector Index (Redis/Cosmos) │
│ - Model Serving (Azure AI)    │
└───────────────┬───────────────┘
                │
┌───────────────▼───────────────┐
│ Data Platform Layer           │
│ - Azure Data Lake / Synapse   │
│ - PostgreSQL / Cosmos DB      │
│ - Kafka / Event Hubs          │
└───────────────┬───────────────┘
                │
┌───────────────▼───────────────┐
│ Resilience & Governance Layer │
│ - Observability, Compliance   │
│ - Policy-as-Code (OPA)        │
│ - MLOps + AIOps telemetry     │
└───────────────────────────────┘
```

3. Key Building Blocks

a. Data Foundation

- PostgreSQL / Synapse for relational analytics

- Cosmos DB (Mongo API) for multimodel operational stores

- Data Lake (Parquet) for cost-efficient archival

- Event Hubs / Kafka for streaming pipelines

Design every dataset with metadata contracts (JSON schema + lineage tags).
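As a rough illustration of what such a contract could look like, here is a minimal sketch in plain Python. The `DatasetContract` class, its field names, and the lineage keys are hypothetical, and the check covers only required fields and basic types. It is not a full JSON Schema validator.

```python
from dataclasses import dataclass, field

# JSON Schema type names mapped to Python types (only a small subset is handled).
_JSON_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def _matches(value, type_name):
    """True if value fits the named type; bools never count as numbers."""
    if isinstance(value, bool):
        return type_name == "boolean"
    return isinstance(value, _JSON_TYPES[type_name])


@dataclass
class DatasetContract:
    """Hypothetical metadata contract: a JSON-Schema-style schema plus lineage tags."""
    name: str
    schema: dict
    lineage: dict = field(default_factory=dict)

    def violations(self, record):
        """Return human-readable contract violations for one record."""
        problems = []
        for key in self.schema.get("required", []):
            if key not in record:
                problems.append(f"missing required field: {key}")
        for key, spec in self.schema.get("properties", {}).items():
            if key in record and not _matches(record[key], spec["type"]):
                actual = type(record[key]).__name__
                problems.append(f"{key}: expected {spec['type']}, got {actual}")
        return problems


# Illustrative contract; the dataset name and tags are assumptions.
contract = DatasetContract(
    name="transactions",
    schema={
        "required": ["txn_id", "amount"],
        "properties": {"txn_id": {"type": "string"}, "amount": {"type": "number"}},
    },
    lineage={"source": "event-hubs", "owner": "payments", "contains_pii": False},
)

print(contract.violations({"txn_id": "t-1", "amount": 12.5}))
print(contract.violations({"txn_id": 7}))
```

In practice a pipeline would likely run a check like this at ingestion and reject or quarantine records that violate the contract.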

b. Intelligence & Vector Layer

An LLM cannot answer reliably about enterprise data without high-quality retrieval. Embed your enterprise data and push the vectors to a specialized store:

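```python
import numpy as np
import redis
from openai import OpenAI
from redis.commands.search.field import VectorField
# Import path as in redis-py 4.x/5.x; it may differ in other versions
from redis.commands.search.indexDefinition import IndexDefinition, IndexType

client = OpenAI()  # reads OPENAI_API_KEY from the environment
text = "Explain Basel III liquidity ratios"
resp = client.embeddings.create(input=text, model="text-embedding-3-small")
vector = np.array(resp.data[0].embedding, dtype=np.float32)  # 1536 dimensions

# redis-py client with the search commands (needs a Redis deployment with vector search)
r = redis.Redis(host="redis-vector.azure.com", port=6380, ssl=True)

# FLAT vector index over hashes whose keys start with "doc:"; COSINE is an assumed metric
r.ft("idx:docs").create_index(
    fields=[VectorField("embedding", "FLAT", {"TYPE": "FLOAT32", "DIM": 1536, "DISTANCE_METRIC": "COSINE"})],
    definition=IndexDefinition(prefix=["doc:"], index_type=IndexType.HASH),
)

# Hash values must be bytes or strings, so store the vector as raw float32 bytes
r.hset("doc:1", mapping={"text": text, "embedding": vector.tobytes()})
```

- Store vectors in Redis Enterprise or Cosmos DB (vCore Mongo + vector index)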

- Use hybrid retrieval — combine semantic (vector) and keyword filters

- Cache LLM responses in Redis so repeated prompts return the same answer
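The hybrid retrieval and caching ideas above can be sketched without any external service. The example below is an in-memory illustration only: the `hybrid_search` function, the `ResponseCache` class, and the three-dimensional toy embeddings are all hypothetical stand-ins for a real vector store and a Redis cache.

```python
import hashlib
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(docs, query_vec, keyword, top_k=2):
    """Keep documents containing the keyword, then rank them by vector similarity."""
    candidates = [d for d in docs if keyword.lower() in d["text"].lower()]
    ranked = sorted(candidates, key=lambda d: cosine(d["embedding"], query_vec), reverse=True)
    return [d["id"] for d in ranked[:top_k]]


class ResponseCache:
    """Dict-backed stand-in for a Redis cache keyed by a hash of the prompt."""

    def __init__(self):
        self._store = {}

    def get_or_compute(self, prompt, compute):
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self._store:
            self._store[key] = compute(prompt)  # only called on a cache miss
        return self._store[key]


# Toy corpus with made-up 3-dimensional embeddings.
DOCS = [
    {"id": "doc:1", "text": "Basel III liquidity coverage ratio", "embedding": [0.9, 0.1, 0.0]},
    {"id": "doc:2", "text": "Basel III capital buffers", "embedding": [0.2, 0.9, 0.1]},
    {"id": "doc:3", "text": "Office holiday schedule", "embedding": [0.9, 0.1, 0.1]},
]

print(hybrid_search(DOCS, [1.0, 0.0, 0.0], "basel"))
```

Note that `doc:3` is close to the query vector but is excluded by the keyword filter, which is the point of combining the two signals.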

c. Resilience & Governance

Resilience is not disaster recovery (DR); it is predictable degradation. Implement layered controls:

- Policy-as-Code: enforce encryption, PII masking

- Chaos Testing: simulate DB latency, node failures

- Observability: trace data-model lineage via OpenTelemetry
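One way to read "predictable degradation" is as an ordered chain of fallbacks, where each tier is cheaper and less capable than the one before it. The sketch below assumes hypothetical `vector_search` and `keyword_search` handlers and simulates a latency fault in the first tier.

```python
def answer_with_degradation(query, tiers):
    """Try each (name, handler) tier in order and return the first result that succeeds."""
    errors = []
    for name, handler in tiers:
        try:
            return {"tier": name, "answer": handler(query), "errors": errors}
        except Exception as exc:  # a real service would catch narrower exception types
            errors.append(f"{name}: {exc}")
    # Every tier failed: return a fixed, known response instead of raising.
    return {"tier": "static", "answer": "Service is temporarily degraded.", "errors": errors}


def vector_search(query):
    # Simulated fault, comparable to the latency injected by a chaos test.
    raise TimeoutError("vector index latency above budget")


def keyword_search(query):
    return f"keyword results for '{query}'"


result = answer_with_degradation(
    "liquidity ratios",
    [("vector", vector_search), ("keyword", keyword_search)],
)
print(result["tier"], result["errors"])
```

The caller always gets a response and can see which tier served it, which makes the degraded behavior observable and testable.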

Example: Resilience Pipeline YAML

```yaml
name: ai-data-chaos-test
trigger: nightly
jobs:
  - chaos:
      uses: azure/chaos-studio@v1
      parameters:
        target: cosmosdb
        fault: latency
        duration: 300s
  - verify:
      script: |
        # --fail makes curl exit non-zero on HTTP 4xx/5xx responses
        curl --fail -X GET "$OBS_API/health?component=vector-layer"
        exit $?
```

4. Workflow: From Data to Insight

| Stage | Tool | Purpose |

|---|---|---|

| Ingest | Event Hubs / ADF | Stream data from APIs, logs, and transactions |

| Transform | Synapse / Databricks | Clean, enrich, and prepare features |

| Embed | Azure OpenAI + Vector DB | Generate embeddings for semantic retrieval |

| Serve | Cosmos DB / Redis | Real-time inference and caching |

| Govern | Purview + Policy-as-Code | Audit, lineage, and explainability |

| Observe | App Insights + KQL | Continuous learning & anomaly detection |

5. Governance by Design

- Lineage: Azure Purview tracks datasets → embeddings → models

- Explainability: each inference stores `prompt`, `embedding_id`, and `result_hash`

- Ethical Guardrails: integrate OpenAI Moderation or internal classifiers
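The explainability record described above could be built along these lines. This is a sketch under assumptions: the `audit_record` function name, the SHA-256 choice for `result_hash`, and the extra `logged_at` timestamp are illustrative and not taken from any specific product.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(prompt, embedding_id, result):
    """Build an audit entry with the prompt, the embedding id, and a hash of the result."""
    return {
        "prompt": prompt,
        "embedding_id": embedding_id,
        # Hashing the result lets auditors verify an answer without storing it twice.
        "result_hash": hashlib.sha256(result.encode("utf-8")).hexdigest(),
        "logged_at": datetime.now(timezone.utc).isoformat(),  # assumed extra field
    }


record = audit_record("Explain Basel III liquidity ratios", "doc:1", "The LCR requires ...")
print(json.dumps(record, indent=2))
```

Storing a hash rather than the full response keeps the log compact while still allowing a later check that a given answer matches what was served.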

Example KQL (query unapproved embeddings):

```kusto
AIOpsLogs
| where VectorSource !in ("approved_knowledgebase", "faq_embeddings")
| summarize count() by VectorSource
```

6. Scaling for Multi-Cloud Reality

- Containerize AI pipelines with AKS / Kubernetes

- Deploy storage via Terraform + Bicep modules

- Enable data mesh domains with cross-region access policies

7. Key Metrics of Maturity

| Dimension | Starter | Advanced | AI-Ready |

|---|---|---|---|

| Data Integration | Manual ETL | Stream + Batch | Event-Driven + Schema Registry |

| Model Ops | Scripted | CI/CD Deployment | Continuous Learning Loops |

| Resilience | Backups | Multi-AZ | Chaos + Auto-Remediation |

| Governance | Manual Review | Purview | Policy-as-Code + Lineage APIs |

| AI Use | POCs | Tactical Apps | Embedded Enterprise AI |

8. The Leadership View

- Invest in people and processes, not just tools.

- Treat data as a product with SLAs.

- Build feedback loops between business outcomes and ML pipelines.

- Promote a culture of experimentation, balanced by compliance.

9. Conclusion

The AI-Ready Data Platform is the nervous system of modern enterprises. It connects: Data (Truth), Intelligence (Insight), and Governance (Trust). Building it requires architectural clarity, leadership intent, and a relentless focus on resilience.